What is CatBoost?

CatBoost is an open-source gradient boosting library developed by Yandex, designed to handle categorical features efficiently [1]. Unlike most traditional gradient boosting implementations, CatBoost accepts categorical columns directly and encodes them internally, which makes it particularly effective for datasets with many categorical variables [2].

Key features of CatBoost include:

- Native Handling of Categorical Features: CatBoost can process non-numeric data directly, eliminating the need for manual encoding.

- Ordered Boosting: This permutation-driven technique reduces overfitting by preventing target leakage during training, which tends to improve accuracy on unseen data [3].

- Symmetric Trees: CatBoost builds balanced (oblivious) trees that apply the same split to every node of a level, which keeps models compact and fast to evaluate [4].

- GPU Support: The library supports GPU acceleration, allowing for faster training on large datasets.

CatBoost is versatile and has been applied across various industries. For instance, JetBrains uses it for code completion, Cloudflare for bot detection, and Careem to predict future ride destinations [5].

Overall, CatBoost offers a robust solution for machine learning tasks, especially when dealing with categorical data, providing high accuracy and efficiency with minimal data preprocessing.

How does it operate?

CatBoost operates within a gradient boosting framework, similar to algorithms like LightGBM and XGBoost. It constructs multiple decision trees sequentially, where each new tree is fitted to correct the errors of its predecessors. This iterative process improves the model's accuracy over time. The final prediction is obtained by aggregating the weighted outputs of all individual trees [6].
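The sequential error-correcting loop described above can be illustrated with a toy regressor. This is a minimal sketch in plain Python, assuming squared-error loss and one-split "stumps" as the trees; the function names `fit_stump` and `boost` are illustrative and are not CatBoost APIs.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        if not left or not right:
            continue  # a split must leave rows on both sides
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def boost(xs, ys, n_trees=20, learning_rate=0.3):
    """Each new stump is fitted to the errors left by the previous ones."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        threshold, left_value, right_value = fit_stump(xs, residuals)
        stumps.append((threshold, left_value, right_value))
        predictions = [
            p + learning_rate * (left_value if x <= threshold else right_value)
            for x, p in zip(xs, predictions)
        ]
    return base, stumps, predictions


def mse(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)


# Toy data, invented for illustration only
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.0]
base, stumps, predictions = boost(xs, ys)
print(mse(ys, [base] * len(ys)), mse(ys, predictions))
```

The final prediction is the base value plus the learning-rate-weighted sum of all stump outputs, which is the aggregation step the paragraph refers to.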

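A distinctive feature of CatBoost is its ability to handle categorical features natively, eliminating the need for extensive preprocessing. It does this with ordered target statistics: each category value is replaced by a statistic of the target computed only from rows that precede the current one in a random permutation, so a row's own label never leaks into its encoding. The related ordered boosting scheme applies the same permutation idea when computing residuals, which mitigates overfitting by preventing target leakage during training.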
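The permutation idea behind CatBoost's categorical encoding, which the cited paper calls ordered target statistics, can be sketched in a few lines. This is a simplified illustration, not CatBoost's implementation: it uses the rows in the order given instead of random permutations, and the parameter names `prior` and `prior_weight` are assumptions chosen for readability.

```python
def ordered_target_statistics(categories, targets, prior=0.5, prior_weight=1.0):
    """Encode each row using only the targets of rows that precede it."""
    sums = {}    # running sum of targets seen so far, per category
    counts = {}  # running count of rows seen so far, per category
    encoded = []
    for category, target in zip(categories, targets):
        seen_sum = sums.get(category, 0.0)
        seen_count = counts.get(category, 0)
        # The current row's own target is not used for its own encoding
        encoded.append((seen_sum + prior_weight * prior) / (seen_count + prior_weight))
        sums[category] = seen_sum + target
        counts[category] = seen_count + 1
    return encoded


# Toy example: an "hour" category and a binary signal, invented for illustration
hours = ["9", "10", "9", "9", "10"]
signals = [1, 0, 1, 0, 1]
encoded = ordered_target_statistics(hours, signals)
print(encoded)
```

The first occurrence of every category falls back to the prior, and later occurrences move towards the mean target of the earlier rows in that category.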

Additionally, CatBoost employs symmetric (oblivious) trees, in which every node at a given level uses the same feature and threshold. This structure makes prediction fast, because a leaf can be located with a fixed sequence of comparisons, and it acts as a regularizer that reduces the risk of overfitting.
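To see why a symmetric tree is cheap to evaluate, consider the sketch below. Because every level shares one split, a tree of depth d is fully described by d (feature, threshold) pairs and 2^d leaf values, and the leaf index is simply the bit pattern of the comparison results. The feature names, thresholds and leaf values here are hypothetical.

```python
def predict_oblivious_tree(row, splits, leaf_values):
    """Walk a symmetric tree: one shared comparison per level."""
    leaf_index = 0
    for feature, threshold in splits:
        # Append one bit per level: 1 if the row goes right, 0 if left
        leaf_index = (leaf_index << 1) | int(row[feature] > threshold)
    return leaf_values[leaf_index]


# A depth-2 tree: level 0 splits on rsi, level 1 splits on atr (made-up values)
splits = [("rsi", 70.0), ("atr", 0.0015)]
leaf_values = [-0.2, 0.1, 0.05, 0.3]

high_rsi_low_atr = predict_oblivious_tree({"rsi": 80.27, "atr": 0.001175}, splits, leaf_values)
low_rsi_high_atr = predict_oblivious_tree({"rsi": 50.0, "atr": 0.002}, splits, leaf_values)
print(high_rsi_low_atr, low_rsi_high_atr)
```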

CatBoost vs XGBoost and LightGBM

| Aspect | CatBoost | LightGBM | XGBoost |
|---|---|---|---|
| Categorical feature handling | Encodes categorical variables automatically using ordered target statistics; raw string categories are accepted. | Can split on integer-coded columns that are declared as categorical; other representations need manual encoding such as one-hot or label encoding. | Traditionally requires manual encoding such as one-hot or label encoding; recent releases add optional built-in categorical support. |
| Decision tree structure | Symmetric (oblivious) trees that are balanced and grow evenly, giving fast predictions and a lower risk of overfitting. | Leaf-wise growth (asymmetric), which expands the leaf with the largest loss reduction. This produces deep, unbalanced trees that can be more accurate but carry a greater risk of overfitting. | Level-wise growth by default (asymmetric), choosing the best split for each node independently. This is flexible but gives slower predictions and some risk of overfitting. |
| Model accuracy | Good accuracy on datasets with many categorical features, helped by ordered boosting and a reduced risk of overfitting on smaller data. | Good accuracy, particularly on large, high-dimensional datasets, since leaf-wise growth concentrates on regions of high error. | Good accuracy on most datasets, but tends to be outperformed by CatBoost on categorical data and by LightGBM on very large datasets because of its less aggressive tree-growing strategy. |
| Training speed | Usually slower to train than LightGBM, but efficient on small to medium datasets, especially when categorical features are involved. | Usually the fastest of the three, especially on large datasets, thanks to its leaf-wise tree growth. | Often the slowest of the three, though usually by a small margin, and still efficient on large datasets. |

Deploying the CatBoost Model

To implement a CatBoost model for predicting trading signals (Buy/Sell), we first need to define our problem scenario. Our dataset comprises continuous features, namely the Open, High, Low, and Close prices (OHLC), and categorical features derived from each bar's timestamp: Day (day of the month, 1 to 31), Day of the Week (Monday to Sunday), Day of the Year (1 to 366), Month (January to December), Hour (0 to 23), and Minute (0 to 59).
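As a quick illustration of the calendar features listed above, the sketch below derives them from a single timestamp using only the standard library. The helper name `calendar_features` is hypothetical; the article itself derives the same fields from the pandas index later on. Note that the day of the year reaches 366 in leap years.

```python
from datetime import datetime


def calendar_features(ts):
    """Derive the categorical date and time features from one bar's timestamp."""
    return {
        "day": ts.day,                          # day of the month, 1 to 31
        "day_of_week": ts.weekday(),            # Monday = 0 ... Sunday = 6
        "day_of_year": ts.timetuple().tm_yday,  # 1 to 366
        "month": ts.month,
        "hour": ts.hour,
        "minute": ts.minute,
    }


first_bar = calendar_features(datetime(2023, 9, 29, 9, 0))
last_hour_of_leap_year = calendar_features(datetime(2024, 12, 31, 23, 0))
print(first_bar)
print(last_hour_of_leap_year["day_of_year"])
```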

The dataset “EURUSD_H1.csv” is pulled in real time using Python and MetaTrader 5, and you can find a downloadable copy in the Files section at the end of the article.

Install and import necessary libraries

We start by importing the CatBoost model after installing it.

pip install catboost

import sys

import numpy as np

import pandas as pd

from stockstats import wrap # https://github.com/jealous/stockstats

import catboost

from catboost import CatBoostClassifier

import sklearn

from sklearn.pipeline import Pipeline

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report

import seaborn as sns

import matplotlib.pyplot as plt

from datetime import datetime

import csv

sns.set_style("darkgrid")

forex_file = "/mnt/c/Users/matsl/Python/Forex/EURUSD_H1.csv"

onnx_file = "/mnt/c/Users/matsl/AppData/Roaming/MetaQuotes/Terminal/930119AA53207C87xxxxxxxxxxxxxxxx/MQL5/Files/CatBoost.EURUSD.OHLC.H1.onnx"

I'm using the Stockstats wrapper by Cedric Zhuang [7] for handy inline stock statistics/indicators support.

print('Python version:{}'.format(sys.version))

print('Numpy version:{}'.format(np.__version__))

print('Pandas version:{}'.format(pd.__version__))

print('CatBoost version:{}'.format(catboost.__version__))

print('Sci-Kit Learn version:{}'.format(sklearn.__version__))

Python version:3.12.3 (main, Nov 6 2024, 18:32:19) [GCC 13.2.0]

Numpy version:1.26.4

Pandas version:2.2.3

CatBoost version:1.2.7

Sci-Kit Learn version:1.5.2

Importing the data

Reading the csv and modifying the dataset to suit our needs.

eurusd_h1 = pd.read_csv(forex_file, index_col=0, parse_dates=True, skipinitialspace=True)

# Rename the columns to lower-case OHLCV names

eurusd_h1.rename(columns={'Open': 'open', 'High': 'high', 'Low': 'low', 'Close': 'close', 'Tick Volume': 'volume'}, inplace=True)

# Drop the columns we do not use

eurusd_h1.drop(columns=['Spread', 'Real Volume'], inplace=True)

# Wrap the frame so stockstats can compute indicators from it

df = wrap(eurusd_h1)

df.head()

| Time | open | high | low | close | volume |
|---|---|---|---|---|---|
| 2023-09-29 07:00:00 | 1.05755 | 1.05814 | 1.05751 | 1.05801 | 457 |
| 2023-09-29 08:00:00 | 1.05801 | 1.05861 | 1.05757 | 1.05765 | 1004 |
| 2023-09-29 09:00:00 | 1.05766 | 1.05920 | 1.05743 | 1.05901 | 3349 |
| 2023-09-29 10:00:00 | 1.05899 | 1.06107 | 1.05881 | 1.06064 | 3762 |
| 2023-09-29 11:00:00 | 1.06066 | 1.06166 | 1.05994 | 1.06095 | 3847 |

# Add technical indicators (stockstats computes each one when its column name is accessed)

df['rsi'] = df['rsi']

df['stochrsi'] = df['stochrsi']

df['atr'] = df['atr']

# Add date and time features

df['day'] = df.index.day

df['day_of_week'] = df.index.dayofweek

df['day_of_year'] = df.index.dayofyear

df['month'] = df.index.month

df['hour'] = df.index.hour

df['minute'] = df.index.minute

# Tidy up the dataframe

df.dropna(inplace=True)

# Ensure all category columns are integers

df = df.astype({'day': 'int', 'day_of_week': 'int', 'day_of_year': 'int', 'month': 'int', 'hour': 'int', 'minute': 'int'})

df.head()

| Time | open | high | low | close | volume | rsi | stochrsi | atr | day | day_of_week | day_of_year | month | hour | minute |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2023-09-29 09:00:00 | 1.05766 | 1.05920 | 1.05743 | 1.05901 | 3349 | 80.269815 | 100.000000 | 0.001175 | 29 | 4 | 272 | 9 | 9 | 0 |
| 2023-09-29 10:00:00 | 1.05899 | 1.06107 | 1.05881 | 1.06064 | 3762 | 90.309633 | 100.000000 | 0.001477 | 29 | 4 | 272 | 9 | 10 | 0 |
| 2023-09-29 11:00:00 | 1.06066 | 1.06166 | 1.05994 | 1.06095 | 3847 | 91.224247 | 100.000000 | 0.001533 | 29 | 4 | 272 | 9 | 11 | 0 |
| 2023-09-29 12:00:00 | 1.06082 | 1.06171 | 1.06040 | 1.06144 | 2966 | 92.439019 | 100.000000 | 0.001489 | 29 | 4 | 272 | 9 | 12 | 0 |
| 2023-09-29 13:00:00 | 1.06144 | 1.06164 | 1.06066 | 1.06091 | 2274 | 79.603662 | 86.114784 | 0.001399 | 29 | 4 | 272 | 9 | 13 | 0 |

Creating the signals for training

We shift the ‘close’ and ‘open’ columns by one row to get the future close and open price values, then we add these new columns to the dataset.
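The labelling rule just described can be sketched in plain Python before looking at the pandas version. The function name `next_bar_signals` is illustrative and not part of the article's code; the prices are the five hourly bars shown in the table above.

```python
def next_bar_signals(opens, closes):
    """Label bar i with 1 (buy) if bar i+1 closes above its open, else 0 (sell)."""
    signals = []
    # The last bar has no following bar, so it receives no label
    for i in range(len(opens) - 1):
        next_open, next_close = opens[i + 1], closes[i + 1]
        signals.append(1 if next_close > next_open else 0)
    return signals


opens = [1.05766, 1.05899, 1.06066, 1.06082, 1.06144]
closes = [1.05901, 1.06064, 1.06095, 1.06144, 1.06091]
signals = next_bar_signals(opens, closes)
print(signals)
```

The pandas code that follows applies the same rule by shifting the columns with `shift(-1)` and dropping the final row, which has no next bar.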

new_df = df.copy()

new_df["target_close"] = df["close"].shift(-1)

new_df["target_open"] = df["open"].shift(-1)

new_df = new_df.dropna()

new_df = new_df.reset_index(drop=True)

open_values = new_df["target_open"]

close_values = new_df["target_close"]

target = []

for i in range(len(open_values)):

    if close_values[i] > open_values[i]:

        target.append(1)

    else:

        target.append(0)

new_df["signal"] = target

print(new_df.shape)

new_df.head()

(8112, 17)

| | open | high | low | close | volume | rsi | stochrsi | atr | day | day_of_week | day_of_year | month | hour | minute | target_close | target_open | signal |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1.05766 | 1.05920 | 1.05743 | 1.05901 | 3349 | 80.269815 | 100.000000 | 0.001175 | 29 | 4 | 272 | 9 | 9 | 0 | 1.06064 | 1.05899 | 1 |
| 1 | 1.05899 | 1.06107 | 1.05881 | 1.06064 | 3762 | 90.309633 | 100.000000 | 0.001477 | 29 | 4 | 272 | 9 | 10 | 0 | 1.06095 | 1.06066 | 1 |
| 2 | 1.06066 | 1.06166 | 1.05994 | 1.06095 | 3847 | 91.224247 | 100.000000 | 0.001533 | 29 | 4 | 272 | 9 | 11 | 0 | 1.06144 | 1.06082 | 1 |
| 3 | 1.06082 | 1.06171 | 1.06040 | 1.06144 | 2966 | 92.439019 | 100.000000 | 0.001489 | 29 | 4 | 272 | 9 | 12 | 0 | 1.06091 | 1.06144 | 0 |
| 4 | 1.06144 | 1.06164 | 1.06066 | 1.06091 | 2274 | 79.603662 | 86.114784 | 0.001399 | 29 | 4 | 272 | 9 | 13 | 0 | 1.05943 | 1.06092 | 0 |

Splitting the data

With the signals ready for prediction, let’s split the data into training and testing samples.
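One caveat before splitting: these are hourly bars in time order, and a shuffled split places rows from the same days in both the training and the test set. A chronological split is a common alternative for time series. The sketch below is an assumption-laden illustration, not the method used in this article; the helper name `chronological_split` is hypothetical, and it simply cuts the rows at 80%.

```python
def chronological_split(rows, train_fraction=0.8):
    """Keep the earliest rows for training and the most recent rows for testing."""
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


# Stand-in for the 8112 time-ordered rows of the dataset (indices only)
row_ids = list(range(8112))
train_rows, test_rows = chronological_split(row_ids)
print(len(train_rows), len(test_rows))
```

With scikit-learn, passing `shuffle=False` to `train_test_split` gives a comparable ordered split.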

X = new_df.drop(columns = ["target_close", "target_open", "signal"]) # we drop future values

y = new_df["signal"] # trading signals are the target variable we want to predict

X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.8, random_state=42)

We create a list of categorical features available in our dataset.

categorical_features = ["day", "day_of_week", "day_of_year", "month", "hour", "minute"]

We can then use this list to convert the categorical features into string format, reflecting how categorical variables are typically stored.

X_train[categorical_features] = X_train[categorical_features].astype(str)

X_test[categorical_features] = X_test[categorical_features].astype(str)

X_train.info()

<class 'pandas.core.frame.DataFrame'>

Index: 6489 entries, 7493 to 7270

Data columns (total 14 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 open 6489 non-null float64

1 high 6489 non-null float64

2 low 6489 non-null float64

3 close 6489 non-null float64

4 volume 6489 non-null int64

5 rsi 6489 non-null float64

6 stochrsi 6489 non-null float64

7 atr 6489 non-null float64

8 day 6489 non-null object

9 day_of_week 6489 non-null object

10 day_of_year 6489 non-null object

11 month 6489 non-null object

12 hour 6489 non-null object

13 minute 6489 non-null object

dtypes: float64(7), int64(1), object(6)

memory usage: 760.4+ KB

Training a CatBoost Model

Before using the fit method to train the CatBoost model, let's explore some of the key parameters [8] that drive its functionality.

| Parameter | Description |
|---|---|
| iterations | The number of boosting iterations, i.e. the maximum number of trees to build. More iterations can improve the fit but also increase the risk of overfitting. |
| learning_rate | Controls the contribution of each tree to the final prediction. A smaller learning rate needs more iterations to converge but often results in better models. |
| depth | The maximum depth of the trees. Deeper trees can capture more complex patterns in the data but may lead to overfitting. |
| cat_features | A list of the indices or names of the categorical columns. It is good practice to declare these explicitly, because integer-coded categories that are not declared are treated as ordinary numbers. |
| l2_leaf_reg | The L2 regularization coefficient. It helps to prevent overfitting by penalizing large leaf values. |
| border_count | The number of splits (bins) used to quantize each numerical feature. Higher values can improve accuracy at the cost of longer training. |
| eval_metric | The evaluation metric reported on the validation data during training. It is used to monitor model performance and to detect overfitting. |
| early_stopping_rounds | When validation data is provided, training stops if the evaluation metric has not improved for this number of rounds. This helps reduce overfitting and can save a lot of training time. |
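The early-stopping rule in the last row can be sketched as follows. This is a plain-Python illustration of the rule as described, not CatBoost's internal implementation; the function name `early_stopping_iteration` and the loss values are made up.

```python
def early_stopping_iteration(test_losses, patience):
    """Return (best_iteration, last_iteration_run) for a sequence of test losses."""
    best_loss = float("inf")
    best_iteration = -1
    for iteration, loss in enumerate(test_losses):
        if loss < best_loss:
            best_loss, best_iteration = loss, iteration
        elif iteration - best_iteration >= patience:
            # No improvement for `patience` rounds: stop training here
            return best_iteration, iteration
    return best_iteration, len(test_losses) - 1


# Hypothetical validation losses: improvement stops after iteration 3
losses = [0.693, 0.692, 0.691, 0.690, 0.691, 0.692, 0.691, 0.689]
best_iteration, stopped_at = early_stopping_iteration(losses, patience=3)
print(best_iteration, stopped_at)
```

Under this rule a later improvement (such as the final 0.689 here) is never seen, which is the trade-off of a small patience value.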

Let’s define a dictionary for the above parameters.

params = dict(

    task_type='GPU', # Train on the GPU; use 'CPU' if no GPU is available

    iterations=300, # Number of boosting iterations

    #learning_rate=0.01, # The automatically defined value should be close to the optimal one.

    depth=12, # Depth of the tree

    feature_border_type='MinEntropy', # Quantization mode used to choose split borders for numerical features

    l2_leaf_reg=12, # L2 regularization coefficient

    loss_function='Logloss', # Loss function to be optimized

    bagging_temperature=1, # Controls intensity of Bayesian bagging

    border_count=128, # Number of splits for numerical features

    eval_metric='Logloss', # Metric for validation data

    random_seed=42, # Seed for reproducibility

    verbose=1, # Verbosity level

    early_stopping_rounds=10 # Early stopping for validation

)

Finally, we define the CatBoost model within the Sklearn pipeline and call the fit method to train it, providing evaluation data and a list of categorical features.

pipe = Pipeline([

("catboost", CatBoostClassifier(**params))

])

# Fit the pipeline to the training data

pipe.fit(X_train, y_train, catboost__eval_set=(X_test, y_test), catboost__cat_features=categorical_features)

0: learn: 0.6906250 test: 0.6928095 best: 0.6928095 (0) total: 240ms remaining: 1m 11s

1: learn: 0.6884363 test: 0.6929242 best: 0.6928095 (0) total: 463ms remaining: 1m 8s

2: learn: 0.6858044 test: 0.6926241 best: 0.6926241 (2) total: 693ms remaining: 1m 8s

3: learn: 0.6840154 test: 0.6923136 best: 0.6923136 (3) total: 915ms remaining: 1m 7s

4: learn: 0.6821880 test: 0.6922213 best: 0.6922213 (4) total: 1.14s remaining: 1m 7s

5: learn: 0.6800458 test: 0.6919058 best: 0.6919058 (5) total: 1.38s remaining: 1m 7s

6: learn: 0.6787011 test: 0.6914997 best: 0.6914997 (6) total: 1.59s remaining: 1m 6s

7: learn: 0.6766485 test: 0.6915836 best: 0.6914997 (6) total: 1.83s remaining: 1m 6s

8: learn: 0.6752735 test: 0.6913356 best: 0.6913356 (8) total: 2.05s remaining: 1m 6s

9: learn: 0.6728656 test: 0.6910021 best: 0.6910021 (9) total: 2.27s remaining: 1m 5s

10: learn: 0.6712232 test: 0.6906219 best: 0.6906219 (10) total: 2.49s remaining: 1m 5s

11: learn: 0.6688273 test: 0.6903468 best: 0.6903468 (11) total: 2.71s remaining: 1m 4s

12: learn: 0.6660441 test: 0.6905106 best: 0.6903468 (11) total: 2.94s remaining: 1m 4s

13: learn: 0.6645466 test: 0.6903318 best: 0.6903318 (13) total: 3.16s remaining: 1m 4s

14: learn: 0.6629403 test: 0.6906848 best: 0.6903318 (13) total: 3.37s remaining: 1m 4s

15: learn: 0.6604999 test: 0.6903479 best: 0.6903318 (13) total: 3.61s remaining: 1m 4s

16: learn: 0.6587366 test: 0.6902117 best: 0.6902117 (16) total: 3.82s remaining: 1m 3s

17: learn: 0.6576030 test: 0.6901320 best: 0.6901320 (17) total: 4.04s remaining: 1m 3s

18: learn: 0.6554191 test: 0.6898560 best: 0.6898560 (18) total: 4.28s remaining: 1m 3s

19: learn: 0.6543412 test: 0.6895462 best: 0.6895462 (19) total: 4.51s remaining: 1m 3s

20: learn: 0.6517582 test: 0.6893069 best: 0.6893069 (20) total: 4.75s remaining: 1m 3s

21: learn: 0.6500230 test: 0.6891939 best: 0.6891939 (21) total: 4.98s remaining: 1m 2s

22: learn: 0.6475220 test: 0.6890455 best: 0.6890455 (22) total: 5.21s remaining: 1m 2s

23: learn: 0.6465565 test: 0.6887497 best: 0.6887497 (23) total: 5.46s remaining: 1m 2s

24: learn: 0.6440090 test: 0.6885688 best: 0.6885688 (24) total: 5.7s remaining: 1m 2s

...

80: learn: 0.5550032 test: 0.6859373 best: 0.6854023 (70) total: 18.3s remaining: 49.6s

bestTest = 0.6854023161

bestIteration = 70

Shrink model to first 71 iterations.

Evaluating the Model

We can evaluate the model’s performance using Sklearn’s metrics.
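For reference, the precision, recall and F1 figures that appear in a classification report can be reproduced by hand. The sketch below uses made-up labels (not the article's data), and the helper name `precision_recall_f1` is illustrative.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for one class from paired labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


# Hypothetical true and predicted Buy (1) / Sell (0) signals
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```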

# Make predictions on training and testing sets

y_train_pred = pipe.predict(X_train)

y_test_pred = pipe.predict(X_test)

# Training set evaluation

print("Training Set Classification Report:")

print(classification_report(y_train, y_train_pred))

# Testing set evaluation

print("\nTesting Set Classification Report:")

print(classification_report(y_test, y_test_pred))Training Set Classification Report:

precision recall f1-score support

0 0.70 0.73 0.72 3162

1 0.74 0.70 0.72 3327

accuracy 0.72 6489

macro avg 0.72 0.72 0.72 6489

weighted avg 0.72 0.72 0.72 6489

Testing Set Classification Report:

precision recall f1-score support

0 0.53 0.52 0.52 805

1 0.53 0.54 0.54 818

accuracy 0.53 1623

macro avg 0.53 0.53 0.53 1623

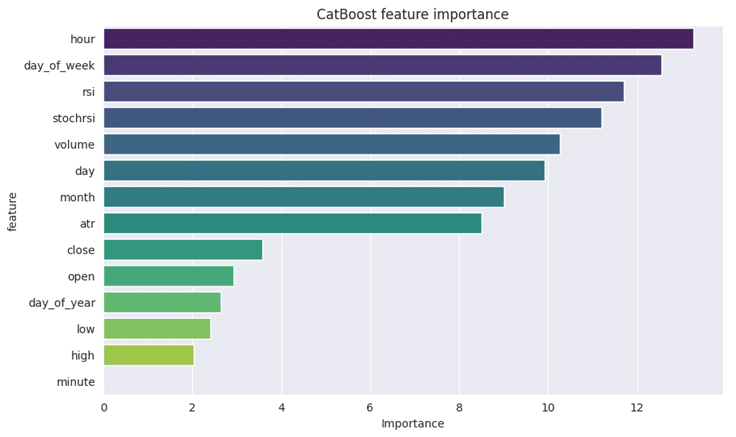

weighted avg 0.53 0.53 0.53 1623To gain a deeper understanding of the model, let’s create a feature importance plot.

# Extract the trained CatBoostClassifier from the pipeline

catboost_model = pipe.named_steps['catboost']

# Get feature importances

feature_importances = catboost_model.get_feature_importance()

feature_im_df = pd.DataFrame({

"feature": X.columns,

"importance": feature_importances

})

feature_im_df = feature_im_df.sort_values(by="importance", ascending=False)

plt.figure(figsize=(10, 6))

sns.barplot(data = feature_im_df, x='importance', y='feature', palette="viridis")

plt.title("CatBoost feature importance")

plt.xlabel("Importance")

plt.ylabel("Feature")

plt.show()

The feature importance plot above gives an overview of which inputs the model relied on. The CatBoost model appears to have ranked the categorical variables as more influential than the continuous ones. Because test accuracy (0.53) is well below training accuracy (0.72), this ranking may partly reflect overfitting to the calendar features rather than a genuine predictive relationship.

In Part 2, I will demonstrate how to save the model in ONNX format and integrate it into an Expert Advisor in MetaTrader 5 for automated trading powered by the CatBoost AI.

Files

References

1. CatBoost – open-source gradient boosting library
2. Introduktion till CatBoost
3. CatBoost: unbiased boosting with categorical features
4. CatBoost in Machine Learning: A Detailed Guide
5. CatBoost – Wikipedia
6. What Is CatBoost? (Definition, How Does It Work?) | Built In
7. Stock Statistics/Indicators Calculation Helper
8. Common parameters – CatBoost
