Bring DeepSeek’s AI power directly into your coding environment, no internet connection required.

Learn how to integrate Ollama, a local platform for running open-source models, with Cursor IDE. Cursor is an AI-enhanced editor built on Visual Studio Code that supports AI pair programming and other advanced coding features. However, its AI functionality relies on cloud services by default.

This guide removes that dependency. By running DeepSeek Coder V2 locally, you get:

- Complete control over your AI tools

- No subscription fees

- Complete offline functionality

I’ll walk you through the process so you can start using AI on your projects right away. Get ready to code smarter and faster, without unwanted charges.

Whether you’re building a personal website or enterprise software, this setup gives you affordable, private AI assistance.

Let’s dive in.

1. Install Ollama and Cursor

Download and set up the tools that power offline AI coding

Ollama

- What it does: A free, open-source tool for downloading, running, and customizing AI models such as DeepSeek on your own machine, with no cloud service required.

- Why it matters: You can avoid internet dependency and API fees by hosting models locally on your computer.

- Download: ollama.com
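Ollama runs as a background server on your machine, and editor extensions such as CodeGPT reach models through its local HTTP API. The sketch below is a minimal check that the server is up before you move on. It assumes Ollama’s default address `http://localhost:11434`, which differs if you changed `OLLAMA_HOST`. The helper name `ollama_is_running` is hypothetical, for illustration only.

```python
import http.client
import urllib.request

# Assumption: Ollama's default local address; adjust if you changed OLLAMA_HOST.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if a server answers HTTP 200 at the root of base_url (hypothetical helper)."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # urllib.error.URLError subclasses OSError, so refused connections,
        # DNS failures, timeouts, and HTTP error statuses all land here.
        return False


if __name__ == "__main__":
    if ollama_is_running():
        print("Ollama server is reachable.")
    else:
        print("Ollama server not reachable; start the Ollama app or run `ollama serve`.")
```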

Cursor

- What it does: An IDE with AI pair programming, error detection, code autocompletion, and other AI-powered features. Think of it as an AI-enhanced version of Visual Studio Code.

- The catch: By default, Cursor’s AI features rely on cloud models. That typically means a paid plan or API keys, plus a constant internet connection. Connecting Cursor to local models served by Ollama works around this.

- Download: cursor.sh

Why this combo works

No subscriptions: Avoid recurring API costs.

Offline freedom: Code without internet.

Privacy-first: Your data stays on your device.

Install both tools, then continue to Step 2 to download the model. Steps 3 and 4 link it to Cursor, giving you AI-assisted coding with no API charges and your data kept on your own computer.

2. Download the model

Ollama makes downloading and sharing LLMs simple.

To download, simply type the command below:

ollama pull deepseek-coder-v2

To try it out, type:

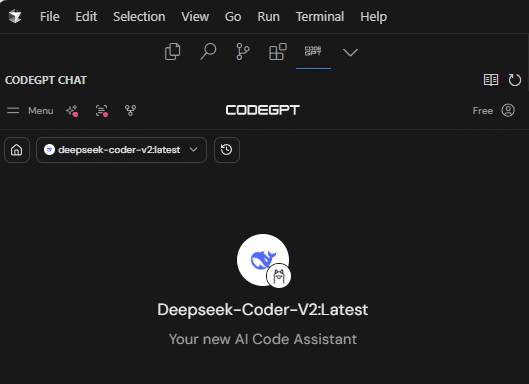

ollama run deepseek-coder-v2

This opens an interactive chat with the model in your terminal; type /bye to exit.

3. Integrate CodeGPT with Cursor IDE

CodeGPT by DanielSanMedium is the extension that connects Cursor IDE to locally hosted AI models, such as DeepSeek Coder V2, through Ollama. Unlike cloud-dependent solutions, this setup gives you private, offline coding assistance without external APIs or subscriptions.

You can usually install CodeGPT from Cursor’s Extensions view by searching for “CodeGPT”. If the Cursor marketplace gives you trouble, use this manual method instead:

- Download the CodeGPT extension: Obtain the .vsix file from a trusted source, such as GitHub or the official documentation.

- Drag and drop: Open Cursor IDE and drag the downloaded file directly into the editor window to install it.

For detailed instructions, refer to the guide: How to Install Extensions on Cursor.
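A manually downloaded .vsix bypasses the marketplace, so it is worth checking what you are about to install. A .vsix package is a ZIP archive, and its `extension/package.json` declares the extension’s `publisher`, `name`, and `version`. The sketch below is a minimal check that assumes that standard layout. The helpers `read_vsix_manifest` and `publisher_matches` are hypothetical. Compare the declared publisher with the one you expect; this guide names it as DanielSanMedium.

```python
import json
import zipfile

# Assumption: standard VS Code extension packaging, where the manifest lives at
# extension/package.json inside the .vsix ZIP archive.
MANIFEST_PATH = "extension/package.json"


def read_vsix_manifest(vsix_path: str) -> dict:
    """Return publisher, name, and version declared in a .vsix file (hypothetical helper).

    Raises zipfile.BadZipFile if the file is not a ZIP archive, and KeyError
    if the archive has no extension/package.json entry.
    """
    with zipfile.ZipFile(vsix_path) as archive:
        with archive.open(MANIFEST_PATH) as fh:
            manifest = json.load(fh)
    return {
        "publisher": manifest.get("publisher", ""),
        "name": manifest.get("name", ""),
        "version": manifest.get("version", ""),
    }


def publisher_matches(vsix_path: str, expected_publisher: str) -> bool:
    """Case-insensitive comparison of the declared publisher with the expected one."""
    declared = read_vsix_manifest(vsix_path)["publisher"]
    return declared.lower() == expected_publisher.lower()
```

For example, `read_vsix_manifest("codegpt.vsix")` (a hypothetical file name) should report the publisher you expect before you drag the file into Cursor.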

With CodeGPT and DeepSeek Coder V2 integrated, you get AI-powered code suggestions, error detection, and contextual debugging, all running locally on your machine. This removes network round-trips, keeps your code off third-party servers, and avoids the recurring costs of cloud-based tools, though response speed now depends on your own hardware.

4. Configure CodeGPT for DeepSeek Coder V2

With CodeGPT installed, the final step is to configure it to use DeepSeek Coder V2, so your coding assistant runs on this specialized model through Ollama’s local server.

- Access Settings: Select CodeGPT in the activity bar.

- Select Ollama as Provider: Navigate to the AI provider options and choose “Ollama” to enable local model integration.

- Assign DeepSeek Coder V2: From the model dropdown, select deepseek-coder-v2, the coding-optimized LLM you installed earlier.
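The dropdown can only offer models that Ollama has already pulled. If deepseek-coder-v2 is missing, `ollama list` shows what is installed. The same information is available from the local server’s `GET /api/tags` endpoint, which returns JSON with a `models` array whose entries carry a `name` such as `deepseek-coder-v2:latest`. The sketch below assumes the default address `http://localhost:11434`, and its helper names are hypothetical. It treats an untagged name as `:latest`, mirroring how Ollama resolves `ollama pull deepseek-coder-v2`.

```python
import json
import urllib.request

# Assumption: Ollama's default local address.
OLLAMA_URL = "http://localhost:11434"


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """GET /api/tags from a running Ollama server and return the decoded JSON."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def normalize(model: str) -> str:
    """Append ':latest' when the final path segment has no tag."""
    last_segment = model.rsplit("/", 1)[-1]
    return model if ":" in last_segment else model + ":latest"


def has_model(tags: dict, model: str) -> bool:
    """True if the /api/tags payload lists `model` (untagged names mean ':latest')."""
    wanted = normalize(model)
    return any(normalize(entry.get("name", "")) == wanted for entry in tags.get("models", []))


if __name__ == "__main__":
    try:
        print(has_model(fetch_tags(), "deepseek-coder-v2"))
    except (OSError, ValueError) as err:  # URLError subclasses OSError; bad JSON raises ValueError
        print(f"Could not query Ollama: {err}")
```

If `has_model(fetch_tags(), "deepseek-coder-v2")` returns False, rerun `ollama pull deepseek-coder-v2` and reopen the dropdown.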

Now that your AI setup is complete, experiment with DeepSeek Coder V2’s capabilities: test its code suggestions, refactor complex logic, or troubleshoot errors in real time. Customize its behavior through prompt engineering or adjust temperature settings in CodeGPT to fine-tune responses for your workflow.
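To see what the temperature setting changes, you can send the same prompt to the model directly, outside the IDE. Ollama’s `POST /api/generate` endpoint accepts a `model`, a `prompt`, `stream: false` for a single JSON reply, and an `options` object that holds `temperature`. The generated text comes back in the `response` field. The sketch below is minimal and assumes the default local address. It calls Ollama directly and is not how CodeGPT itself is implemented; `build_generate_payload` and `generate` are hypothetical helpers.

```python
import json
import urllib.request

# Assumptions: Ollama's default local address and the model pulled in Step 2.
OLLAMA_URL = "http://localhost:11434"
MODEL = "deepseek-coder-v2"


def build_generate_payload(prompt: str, temperature: float, model: str = MODEL) -> dict:
    """Request body for POST /api/generate; lower temperature means less random output."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # one JSON object instead of a stream of chunks
        "options": {"temperature": temperature},
    }


def generate(prompt: str, temperature: float = 0.2, base_url: str = OLLAMA_URL) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    body = json.dumps(build_generate_payload(prompt, temperature)).encode("utf-8")
    request = urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(generate("Write a Python function that reverses a string.", temperature=0.0))
    except (OSError, ValueError, KeyError) as err:  # unreachable server or unexpected reply
        print(f"Could not get a response from Ollama: {err}")
```

Sending the same prompt at `temperature=0.0` and again at a higher value such as `0.8` is a quick way to see the effect before changing the setting in CodeGPT.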

Why This Matters

Configuring CodeGPT to use DeepSeek Coder V2 unlocks precise, context-aware code generation, error diagnostics, and logic optimization directly in your IDE. By running locally, it eliminates cloud latency, ensures data privacy, and operates independently of internet connectivity.

Final Words: Why CodeGPT and DeepSeek Coder V2?

CodeGPT was selected for this guide because it works with DeepSeek Coder V2 through Ollama. It offers a stable, actively maintained interface for bringing locally hosted models into Cursor IDE. That makes it a practical way to use DeepSeek Coder V2, a model built specifically for code generation, debugging, and technical problem-solving.

By focusing on DeepSeek Coder V2, you’ve equipped yourself with a tool designed to elevate coding efficiency, accuracy, and innovation—all while retaining full control over your data and infrastructure.
